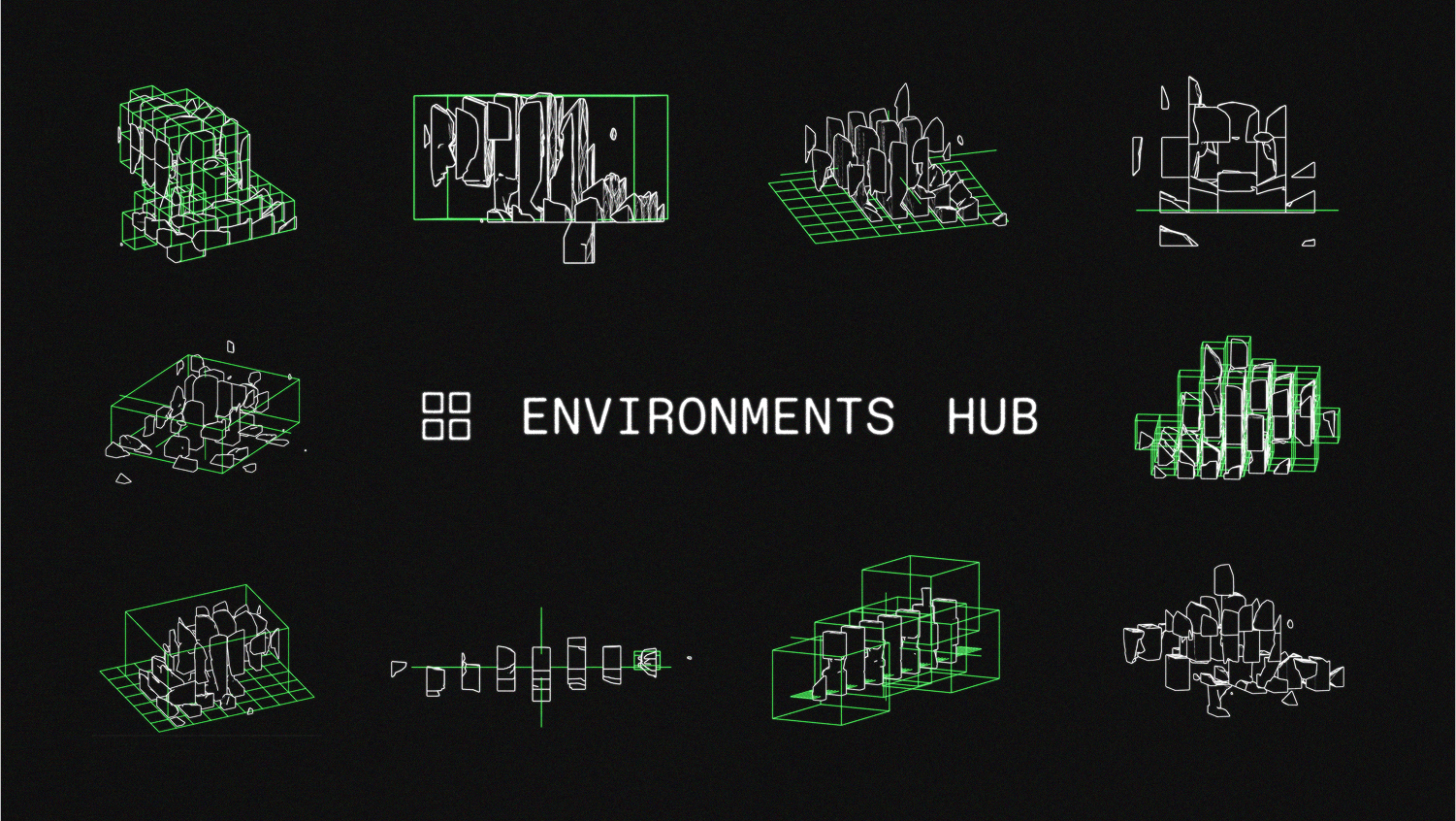

Environments Hub: A Community Hub To Scale RL To Open AGI

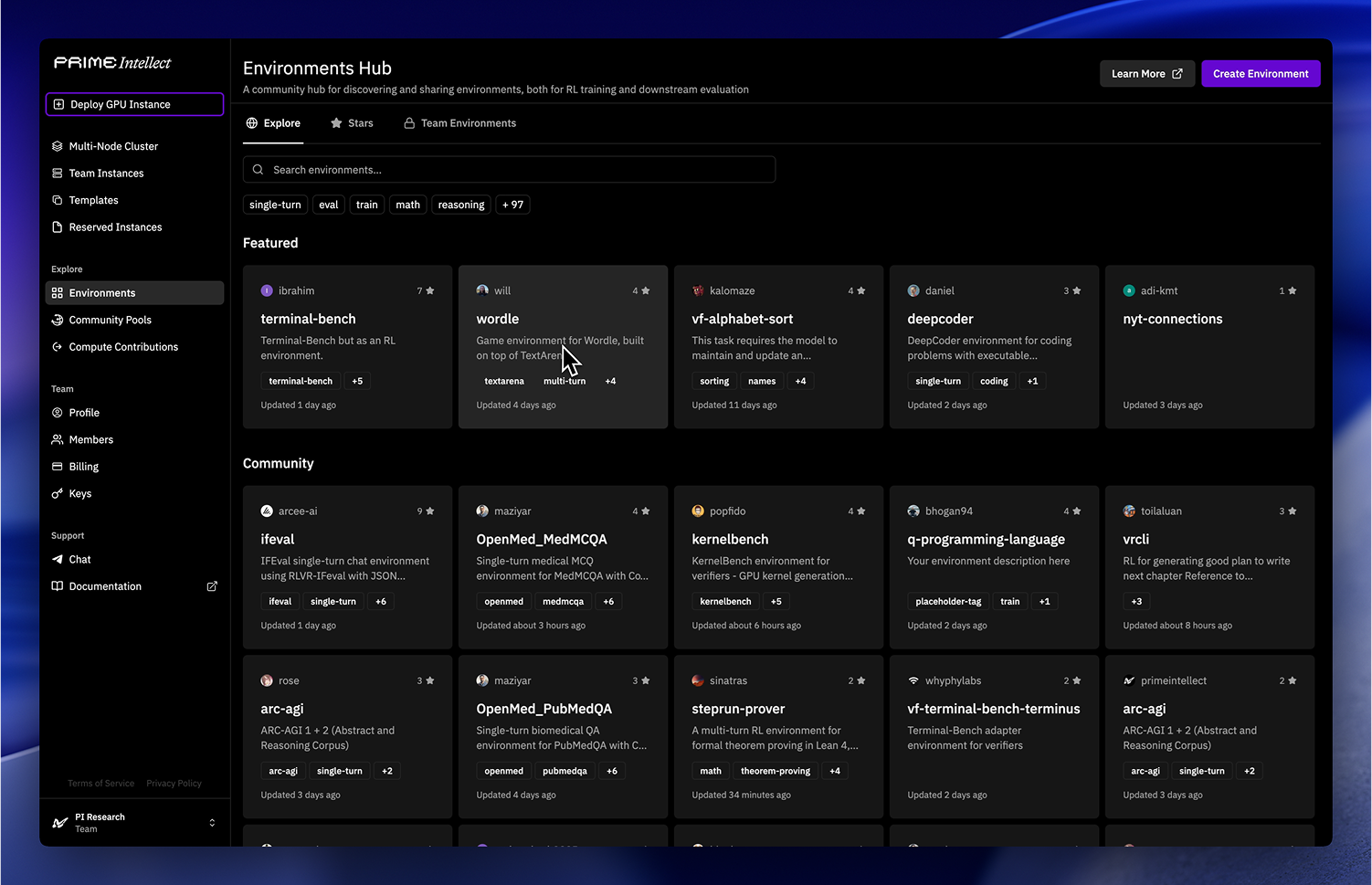

RL environments are the playgrounds where agents learn. Until now, they’ve been fragmented, closed, and hard to share. We are launching the Environments Hub to change that: an open, community-powered platform that gives environments a true home.

Environments define the world, the rules, and the feedback loop of state, action, and reward. From games to coding tasks to dialogue, they’re the contexts in which AI learns; without them, RL is just an algorithm with nothing to act on.
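
To make the state, action, and reward loop concrete, here is a toy, self-contained Python sketch. The `GuessNumberEnv` class, its `reset`/`step` methods, and `binary_search_agent` are hypothetical illustrations loosely modeled on the common Gym-style interface. They are not the API of the Environments Hub or the verifiers library.

```python
from __future__ import annotations

import random
from typing import Optional, Tuple


class GuessNumberEnv:
    """Toy environment (hypothetical): guess a hidden integer in [low, high].

    World: a hidden target number. Rules: at most `max_turns` guesses.
    Feedback: each step returns (observation, reward, done).
    """

    def __init__(self, low: int = 1, high: int = 100, max_turns: int = 10,
                 seed: Optional[int] = None) -> None:
        self.low, self.high, self.max_turns = low, high, max_turns
        self._rng = random.Random(seed)
        self._target = low
        self._turns = 0

    def reset(self) -> str:
        """Start a new episode and return the initial observation."""
        self._target = self._rng.randint(self.low, self.high)
        self._turns = 0
        return f"Guess a number between {self.low} and {self.high}."

    def step(self, action: int) -> Tuple[str, float, bool]:
        """Apply one guess; reward is 1.0 only for the correct number."""
        self._turns += 1
        if action == self._target:
            return "correct", 1.0, True
        hint = "higher" if action < self._target else "lower"
        # The episode also ends when the guess budget is exhausted.
        return hint, 0.0, self._turns >= self.max_turns


def binary_search_agent(env: GuessNumberEnv) -> float:
    """A scripted 'policy' that halves the search range after each hint."""
    env.reset()
    lo, hi = env.low, env.high
    total, done = 0.0, False
    while not done:
        guess = (lo + hi) // 2
        obs, reward, done = env.step(guess)
        total += reward
        if obs == "higher":
            lo = guess + 1
        elif obs == "lower":
            hi = guess - 1
    return total
```

With a range of 1 to 100, binary search needs at most 7 guesses, so `binary_search_agent(GuessNumberEnv(seed=0))` returns 1.0. An RL agent would have to discover a comparable strategy from the reward signal alone.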

Environments sit at the center of current AI progress. Each new one expands what we can train, study, and evaluate, making open models more competitive. By lowering the friction to build, share, and reuse environments, the Hub enables anyone in the world to contribute directly to open-source AGI progress.

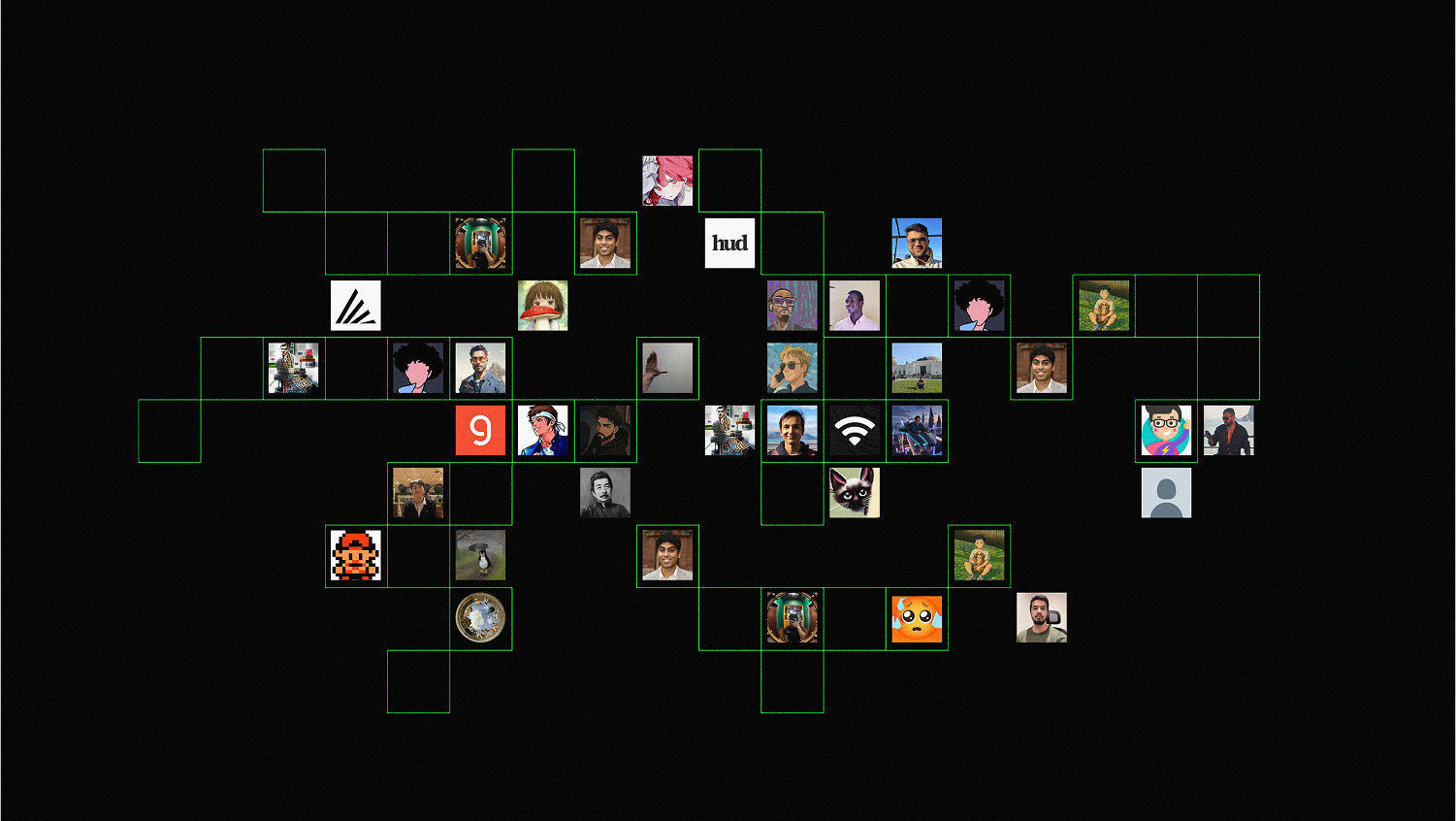

In last week’s private beta, over 30 researchers and companies contributed environments to the Hub. Starting today, we’re opening it up to everyone, building the open alternative to big labs’ closed research tooling, with infrastructure for RL, Reinforcement Fine-Tuning (RFT), compute, and inference. We want the next wave of startups, AI progress, and adoption to be built on open rails and open models, rather than fed into the walled gardens of big labs, where it would only entrench their lead.

Motivation

Most current discussion around RL environments centers on a wave of startups whose business model is to build and sell them exclusively to a handful of large closed labs.

This trend poses both a serious risk and an opportunity. If high-quality environments remain expensive and closed, open-source models will fall further behind. But if a robust ecosystem of open-source environments and training tools emerges, open-source can set the state of the art.

Right now, open research lacks the tools to study many of the questions big labs consider most critical. With this release, we aim to change that. The Environments Hub, together with the RL infrastructure we’re building around it, is designed to enable the next wave of startups and AI progress to be built on open rails and open models.

Quick Links

- Environments Hub

- Environments Hub Documentation

- prime CLI

- verifiers github

- verifiers docs

- prime-rl github

Features

Developing and Sharing Environments

Create, manage, and share environments for reinforcement learning and evaluation on the Environments Hub.
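
As a rough illustration of what a shareable single-turn environment can contain, the sketch below pairs a small dataset with a parser and a reward function. It assumes answers are wrapped in `<answer>` tags; `parse_answer`, `exact_match_reward`, and `SingleTurnTask` are hypothetical names written in plain Python, not the verifiers API or the Hub's packaging format.

```python
from __future__ import annotations

import re
from dataclasses import dataclass
from typing import Callable, List, Optional

# Captures the contents of every <answer>...</answer> block (assumed format).
_ANSWER_RE = re.compile(r"<answer>(.*?)</answer>", re.DOTALL)


def parse_answer(completion: str) -> Optional[str]:
    """Hypothetical parser: text inside the last <answer> tag, or None."""
    matches = _ANSWER_RE.findall(completion)
    return matches[-1].strip() if matches else None


def exact_match_reward(completion: str, answer: str) -> float:
    """1.0 if the parsed answer equals the reference answer, else 0.0."""
    return 1.0 if parse_answer(completion) == answer.strip() else 0.0


@dataclass
class SingleTurnTask:
    """Hypothetical container: prompts, reference answers, and a reward function."""
    prompts: List[str]
    answers: List[str]
    reward_fn: Callable[[str, str], float] = exact_match_reward

    def __post_init__(self) -> None:
        if len(self.prompts) != len(self.answers):
            raise ValueError("each prompt needs exactly one reference answer")

    def score(self, completions: List[str]) -> List[float]:
        """Score one model completion per prompt."""
        if len(completions) != len(self.answers):
            raise ValueError("need exactly one completion per prompt")
        return [self.reward_fn(c, a) for c, a in zip(completions, self.answers)]
```

Keeping the reward function as a swappable field means the same dataset can be reused with a stricter or more lenient grader without changing the rest of the environment.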

Evals

Create and explore evaluation reports for various models on each environment.
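
The Hub’s actual report format is not described here, so the following is only a sketch of one common way to summarize per-model results: mean reward over all rollouts, plus the standard unbiased pass@k estimator. It assumes a rollout counts as "correct" when its reward is at least 1.0; the function names and the `"model-a"` key are hypothetical.

```python
from __future__ import annotations

import math
from statistics import mean
from typing import Dict, List


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k: chance that at least one of k rollouts, drawn without
    replacement from n rollouts of which c are correct, is correct."""
    if not 0 < k <= n:
        raise ValueError("k must satisfy 0 < k <= n")
    if n - c < k:
        return 1.0  # every size-k draw must include a correct rollout
    return 1.0 - math.comb(n - c, k) / math.comb(n, k)


def eval_report(rewards: Dict[str, List[List[float]]],
                k: int = 1) -> Dict[str, Dict[str, float]]:
    """rewards[model][i] holds the rewards of all rollouts on example i.

    Assumption: a rollout is correct when its reward is >= 1.0.
    """
    report: Dict[str, Dict[str, float]] = {}
    for model, per_example in rewards.items():
        flat = [r for rollouts in per_example for r in rollouts]
        passes = [pass_at_k(len(rs), sum(r >= 1.0 for r in rs), k)
                  for rs in per_example]
        report[model] = {"mean_reward": mean(flat), f"pass@{k}": mean(passes)}
    return report
```

For example, `eval_report({"model-a": [[1.0, 0.0], [0.0, 0.0]]}, k=1)` gives a mean reward of 0.25 and a pass@1 of 0.25.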

RL Training

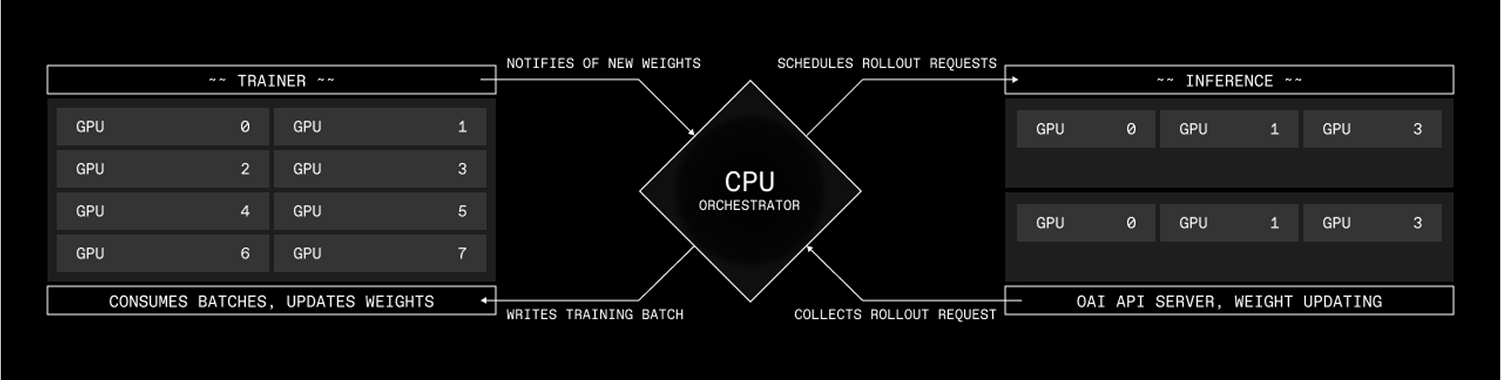

Environments are natively supported in our scalable trainer, prime-rl (https://github.com/PrimeIntellect-ai/prime-rl).

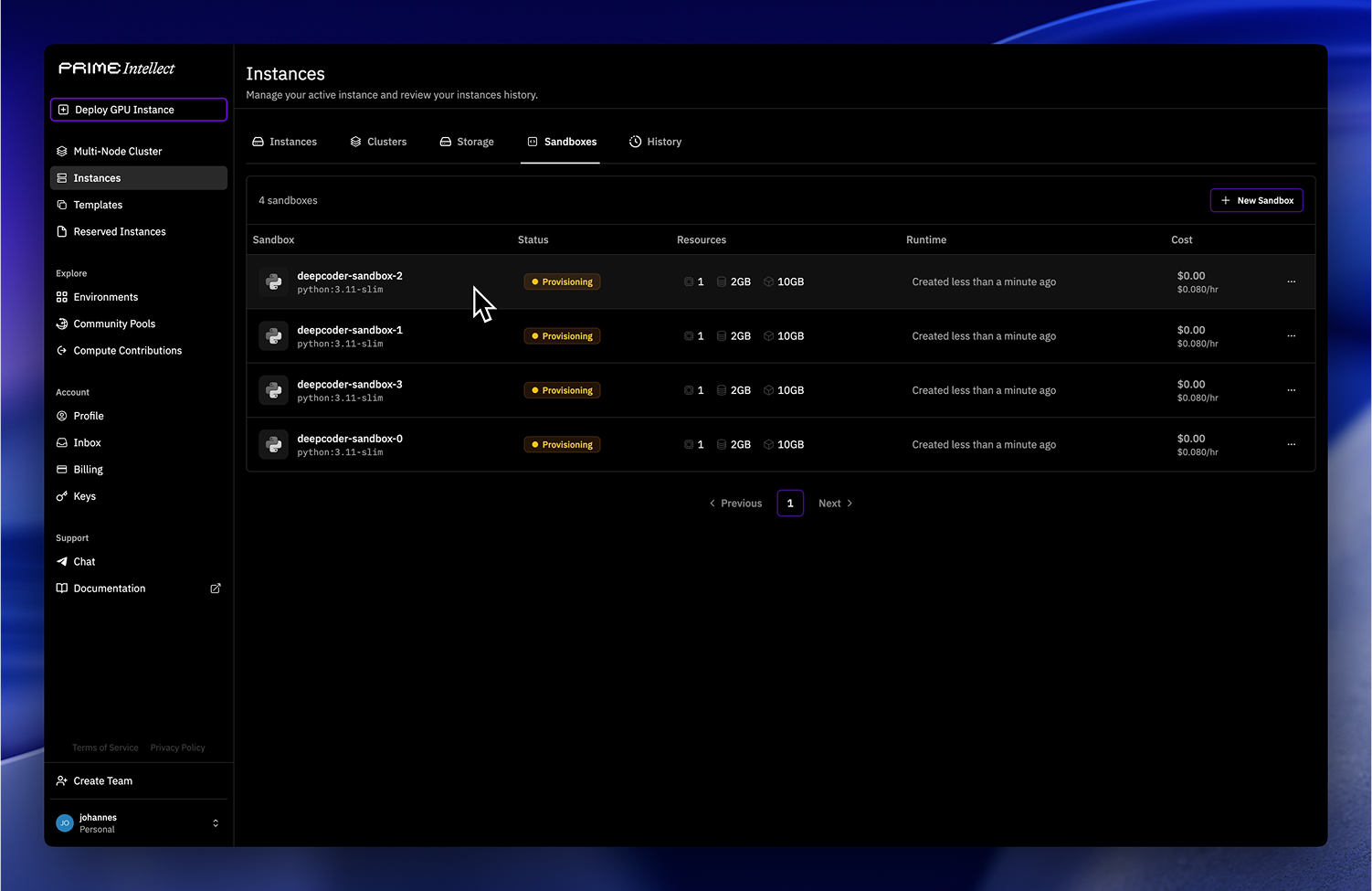

Sandboxes

We’re also launching sandboxes in beta that plug directly into Verifier Environments for secure code execution.
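
To show how code execution typically feeds a reward, here is a minimal local sketch: it runs a candidate solution plus its tests in a fresh Python process and returns 1.0 if the process exits cleanly within a timeout. This is an assumption-laden illustration, not the Hub’s sandbox API, and a subprocess with a timeout is only basic process isolation, not a secure sandbox for untrusted model code. `code_execution_reward` is a hypothetical name.

```python
from __future__ import annotations

import subprocess
import sys
import tempfile
from pathlib import Path


def code_execution_reward(solution: str, tests: str, timeout_s: float = 5.0) -> float:
    """Run solution + tests in a separate Python process; 1.0 if exit code is 0.

    WARNING: this is NOT a secure sandbox. It offers no filesystem or network
    isolation; real untrusted code needs a proper sandboxed environment.
    """
    with tempfile.TemporaryDirectory() as tmp:
        script = Path(tmp) / "candidate.py"
        script.write_text(solution + "\n\n" + tests + "\n")
        try:
            # -I runs Python in isolated mode (ignores env vars and user site-packages).
            result = subprocess.run(
                [sys.executable, "-I", str(script)],
                cwd=tmp,
                capture_output=True,
                timeout=timeout_s,
            )
        except subprocess.TimeoutExpired:
            return 0.0  # subprocess.run kills the child on timeout
    return 1.0 if result.returncode == 0 else 0.0
```

A failing assertion in the tests produces a non-zero exit code, so the same function grades both wrong answers and crashes as 0.0.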

Contributors

A big thank you to all contributors during last week’s beta. Special shoutout to Arcee AI, Hud.so, WhyPhy Labs, Groq, and the many individuals who contributed their first environments to the Hub!

With this launch, we’re also opening up a list of open and in-progress RFCs and bounties here. The tasks we’re sourcing are deliberately chosen: we want to collectively build towards a state-of-the-art open INTELLECT-3 model for agentic and coding tasks.

Let us know if you’d like to claim a lock on one (via an initial draft PR or design doc) by messaging Will or Johannes on X or by opening a PR to https://github.com/PrimeIntellect-ai/prime-environments.

If you are interested in RL environments that don't have a bounty figure listed yet, just ask and we'll figure something out based on the difficulty scale we're using.

We are also opening up applications for novel environments and evals. Researchers accepted in this program will receive compute for running experiments, a stipend and support from our internal research team. Some moonshot examples of environments and evals we’d be especially excited about:

- Robust code-quality evaluations for agentic software engineering

- Evaluating usage of filesystems and memory for long-running tasks

- Adaptive coherent instruction-following for realistic multi-turn interactions

- High-quality creative writing and style adherence

- Generative generalist reward models with process critiques

- Harness and task design for interactive data science + machine learning, such as:

  - Environments for NanoGPT speedrun optimizations

  - Terminal-friendly data visualization

- Research plan generation, with recent notable papers as golden targets

Next Steps: Providing Full-Stack AGI Infrastructure

Over the past few months, we’ve made significant progress scaling agentic RL training to the largest open model sizes. With many crowdsourced environments feeding into INTELLECT-3, we are confident we can train a fully open, state-of-the-art agentic model.

Beyond INTELLECT-3, our focus with the Environments Hub is on making this infrastructure accessible to everyone: enabling researchers and startups alike to train models for their own tasks, integrate tools, run Reinforcement Fine-Tuning (RFT), and optimize agent scaffolds. Our entire stack is open-source (prime-rl), and we are extending it to run seamlessly on top of our global compute supply.

We believe RL is not only the path to AGI but also the foundation for building AI-native products. The most successful future startups will emerge by creating novel, differentiated environments tailored to their needs. Today, the biggest barrier is not access to models (trillion-parameter agentic models already exist) but the infrastructure and cost of training and serving them at scale. By lowering this barrier, we aim to give any AI builder cheap, seamless access to compute, inference, training, and full-stack RL infrastructure, enabling more full-stack AI startups and builders.

Our goal is to give every researcher and company access to an open RL infrastructure stack, one that is currently locked behind the walls of closed labs.

If you’re excited to help shape the future of a truly sovereign open-source AI ecosystem, we’d love to hear from you. We invite researchers and companies to:

- Contribute: Develop environments & evals for the Environments Hub. Get Started

- Collaborate: We’re hiring engineers and researchers at the intersection of AI and distributed systems. Careers

Q&A

Join the Prime Intellect Discord to discuss, share feedback, and ask any questions :)
